Improving Linguistic Data with LLMs

By Dr. Slavko Žitnik and Timotej Knez

Large language models (LLMs) are revolutionising the way we access information, communicate and work. In addition to everyday applications, LLMs are also reshaping scientific fields such as language studies, humanities and social sciences. However, although their capabilities are diverse, LLMs still have their limitations: they can provide inconsistent or incorrect answers, require significant computational resources, perform poorly on less-resourced languages and struggle with tasks involving social understanding, ethics and human needs.

Addressing the Research Challenge

A promising way to improve LLM performance is to use high-quality lexicographic data. Such data can support LLM pretraining by providing both raw text and structured information, including synonymy, antonymy, hyponymy, hypernymy, meronymy, holonymy, sense distributions, idiomatic expressions and cross-linguistic distributions. Despite its potential, this rich linguistic knowledge is not yet fully utilised in existing LLMs.

By integrating this type of data into the development of LLMs, we can reduce hallucinations, improve language proficiency in complex contexts, and strengthen fine-tuning for tasks such as commonsense reasoning and natural language inference. Our project focuses on applying this approach to Slovene, a less-resourced, morphologically rich language that lacks the digital, educational, and institutional support that global languages such as English enjoy.

Our Approach: Extracting Knowledge Graphs from Lexical Resources

We have developed a novel methodology for extracting knowledge graphs from digital linguistic databases that is tailored to morphologically complex languages. Specifically, we applied this methodology to the Digital Dictionary Database for Slovene (DDD), the largest freely accessible lexical-lexicographical resource for Slovene, and several other structured Slovene lexicographic resources.
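To make the idea of a knowledge graph built from lexical data more concrete, the following is a minimal sketch of how a single dictionary entry could be turned into subject-relation-object triples. It is not the extraction methodology used in the project: the `LexicalEntry` class, the relation names (`has_form`, `has_synonym`, `has_hypernym`) and the example values are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LexicalEntry:
    """Hypothetical, simplified view of one dictionary entry."""
    lemma: str
    forms: list[str] = field(default_factory=list)
    synonyms: list[str] = field(default_factory=list)
    hypernyms: list[str] = field(default_factory=list)


def entry_to_triples(entry: LexicalEntry) -> list[tuple[str, str, str]]:
    """Turn one entry into (subject, relation, object) triples."""
    # Relation names are illustrative assumptions, not a published schema.
    relations = [
        ("has_form", entry.forms),
        ("has_synonym", entry.synonyms),
        ("has_hypernym", entry.hypernyms),
    ]
    triples = []
    for relation, values in relations:
        for value in values:
            triples.append((entry.lemma, relation, value))
    return triples


if __name__ == "__main__":
    # Illustrative values only; they are not copied from the corpus.
    entry = LexicalEntry(
        lemma="jagoda",
        forms=["jagode", "jagodi"],
        hypernyms=["sadež"],
    )
    for triple in entry_to_triples(entry):
        print(triple)
```

Keeping every relation as a flat triple means that inflected forms and semantic relations share one representation, which is one simple way to handle a morphologically rich language in a graph.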

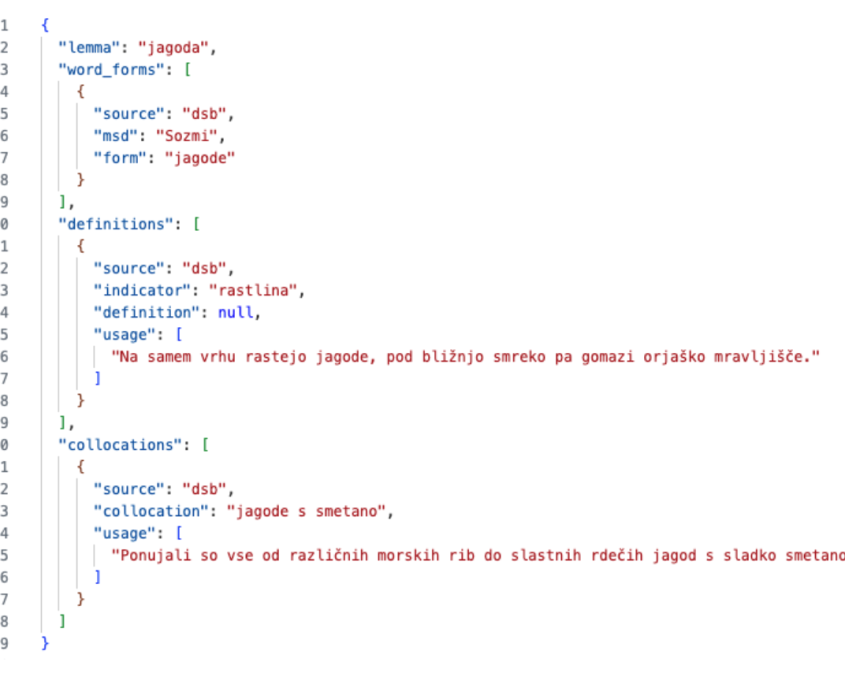

The resulting corpus comprises 356,294 words and was built from single-lexeme entries sourced from the DDD. Only individual words (no multi-word expressions) were included to ensure a clear lexical focus. For each word, all morphological forms were listed using data from the DDD. Definitions of word senses were collected from multiple sources (SSKJ, sloWnet and the Bridge Dictionary), while semantic indicators from the DDD were used when full definitions were not available. Usage examples were included where present (from SSKJ and the DDD). Common collocations were added based on DDD data. Synonyms were sourced from a dedicated synonyms dictionary, often grouped by word sense and labelled with semantic indicators where possible (see Figure 1).

The corpus is saved as a structured markdown file, designed for both human readability and machine parsing, and is freely available for everyone to use.

Figure 1: Snapshot of data extracted from structured resources. Example entry for the word jagoda (strawberry).
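Because the corpus is a structured markdown file with separators, it can be read with a few lines of code. The sketch below shows one possible way to do this. The exact layout of the file is not described in this article, so the format used here is an assumption: entries separated by a `---` line, a `# lemma` heading, and `## Section` headings followed by `- ` bullets. The sample text is illustrative and not copied from the corpus, and the parser would need adapting to the real separators and section names.

```python
import re

# Hypothetical sample in an ASSUMED layout; the real corpus may differ.
SAMPLE = """\
# jagoda
## Forms
- jagoda
- jagode
## Collocations
- gozdna jagoda
---
# hiša
## Forms
- hiša
- hiše
"""


def parse_corpus(text: str) -> list[dict]:
    """Split the text into entries and collect the bullets of each section."""
    entries = []
    # Assumed separator: a line consisting only of three hyphens.
    for block in re.split(r"^---[ \t]*$", text, flags=re.MULTILINE):
        entry = None
        section = None
        for raw_line in block.splitlines():
            line = raw_line.strip()
            if line.startswith("## ") and entry is not None:
                # A new section inside the current entry.
                section = line[3:].strip().lower()
                entry[section] = []
            elif line.startswith("# "):
                # A new entry starts with its lemma.
                entry = {"lemma": line[2:].strip()}
                section = None
            elif line.startswith("- ") and entry is not None and section is not None:
                entry[section].append(line[2:].strip())
        if entry is not None:
            entries.append(entry)
    return entries


if __name__ == "__main__":
    for parsed in parse_corpus(SAMPLE):
        print(parsed["lemma"], parsed.get("forms", []))
```

Returning plain dictionaries keeps the sketch independent of any particular set of section names, so sections such as definitions or synonyms would be picked up without code changes if they follow the same assumed pattern.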

This dataset opens up use cases in the additional pretraining of large language models, lexical analysis and semantic modelling. Following the completion of this resource, the next steps of our work are already underway. An initial improved LLM will be developed by month 12, followed by a final, refined model by month 24.

Corpus Metadata

- Corpus name: Lexical LLM Pretraining Corpus

- File: D1.1.1 – pretraining corpus

- Total entries: 356,294 words

- Language: Slovene

- Sources: DDD, SSKJ, sloWnet, Bridge Dictionary, Synonyms Dictionary

- Content: Lemmas, forms, senses, definitions or indicators, examples, collocations, synonyms

- Format: Markdown, structured with separators

- Use cases: LLM pretraining, lexical analysis, semantic modelling

Citation:

Žitnik, S. and Knez, T. (2025). Improving Linguistic Data with LLMs. Zenodo. https://doi.org/10.5281/zenodo.15878672